World Check – Next Generation

World-Check is a database of Politically Exposed Persons (PEPs) and heightened risk individuals and organisations, used around the world to help identify and manage financial, regulatory and reputational risk.

The database was created in response to legislation aimed at reducing financial crime. Initially, World-Check’s intelligence was used by banks and financial institutions as a comprehensive solution for assessing, managing and remediating risk. As legislation has become more complex and more global in reach, demand for such intelligence has grown beyond the financial sector to organisations in all sectors.

Challenge

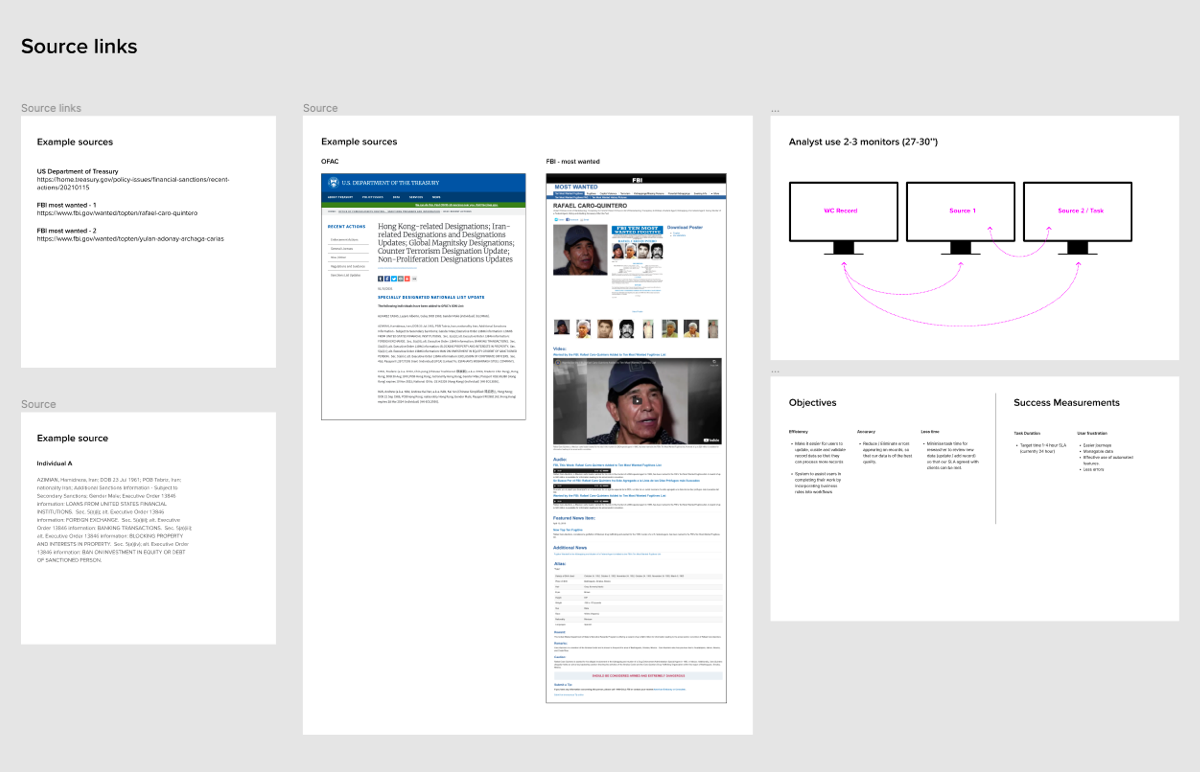

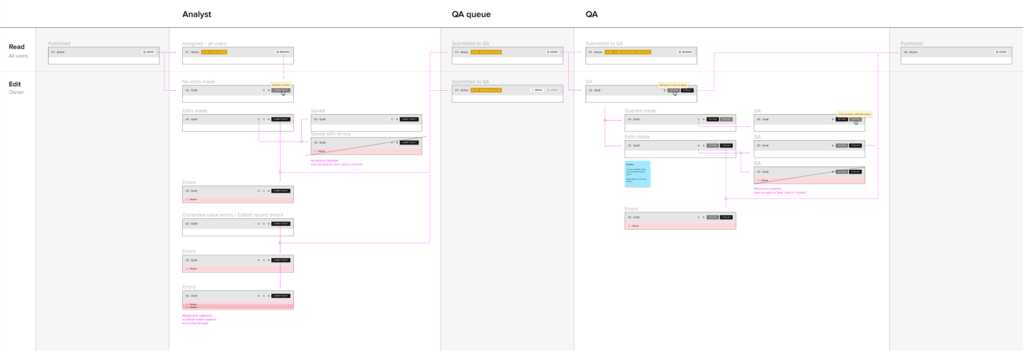

All entity profiles and their data are hosted on the Dante platform as records. Data comes from many types of existing and new sources, which analysts must constantly monitor to keep the database up to date. This is currently done in Salesforce, where all data update requests are turned into tasks. Tasks are then allocated to analysts, who update the profiles (records) and submit them to QA for approval. All task and update information is captured for audit purposes.

To support the growth in content (15-20% per year) and the need to provide more advanced and accurate data, the World-Check Next Generation project is merging these two platforms into one, using IBM BAW (Business Automation Workflow). The main objectives are quicker user journeys, better handling of the data curation process and minimal data duplication. These will be achieved by introducing:

- automated features in multiple areas of both the curation and maintenance journeys

- source keyword tagging for all data entries

- enhanced data structure

Approach

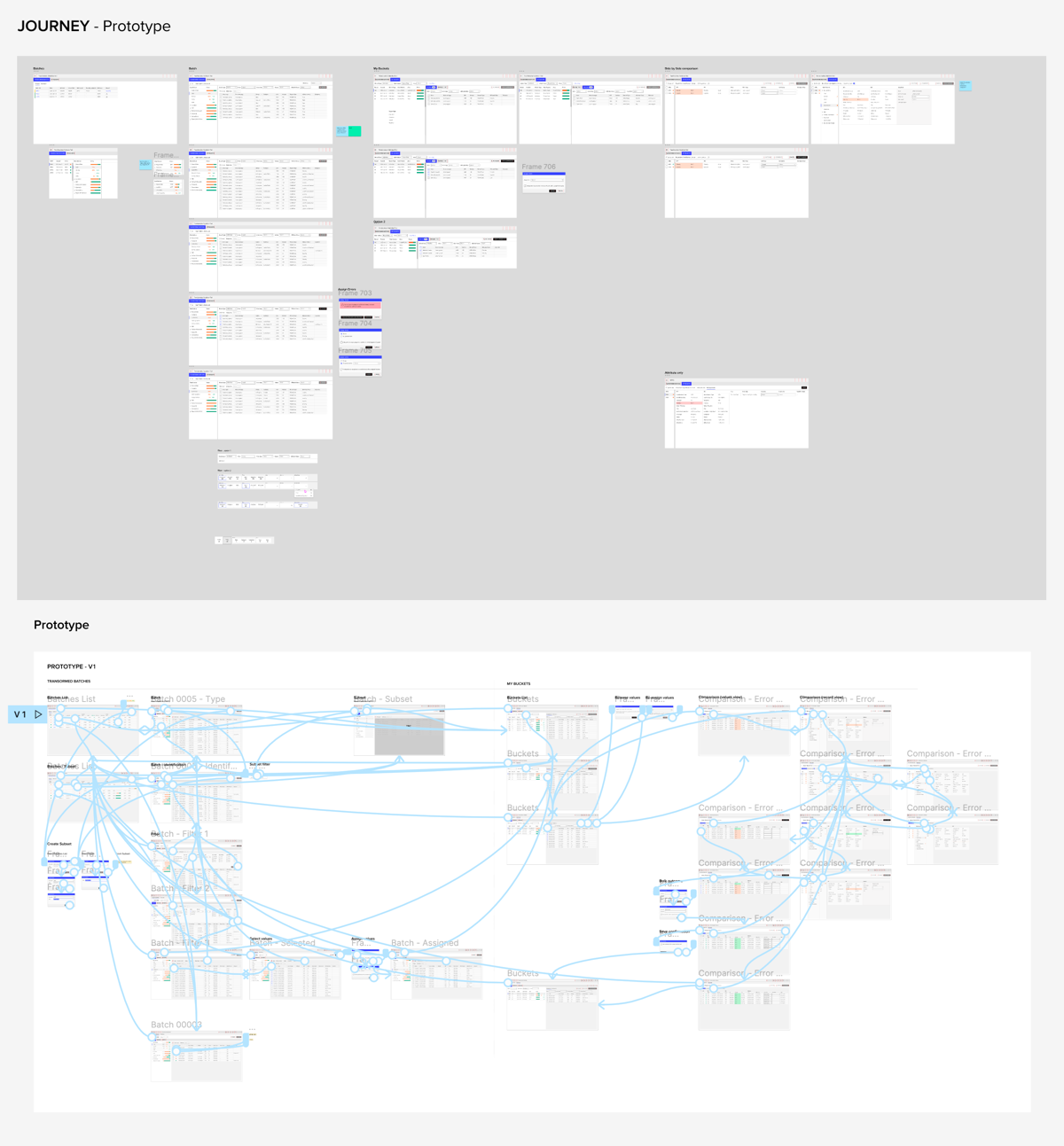

The first problem was that it was not clear where to start. There was no definitive plan or brief that captured all objectives and requirements, which were needed to set priorities and monitor success measures. As a new member of the team, I started by exploring the different areas and processes through multiple user demos and interviews. The aim was to create a general map that would help me understand the broader product and the multiple use cases and actors involved, and then gradually build a ‘skeleton’ of the end-to-end journey with an iterative process around it.

This discovery phase produced a main brief, which was then broken down into several mini briefs to tackle each defined area. Briefs included:

- Objectives and problems

- Key personas and scenarios

- Success measurements

- Priorities and timelines

- Design process steps and what is required from the BAs and the development team at each step

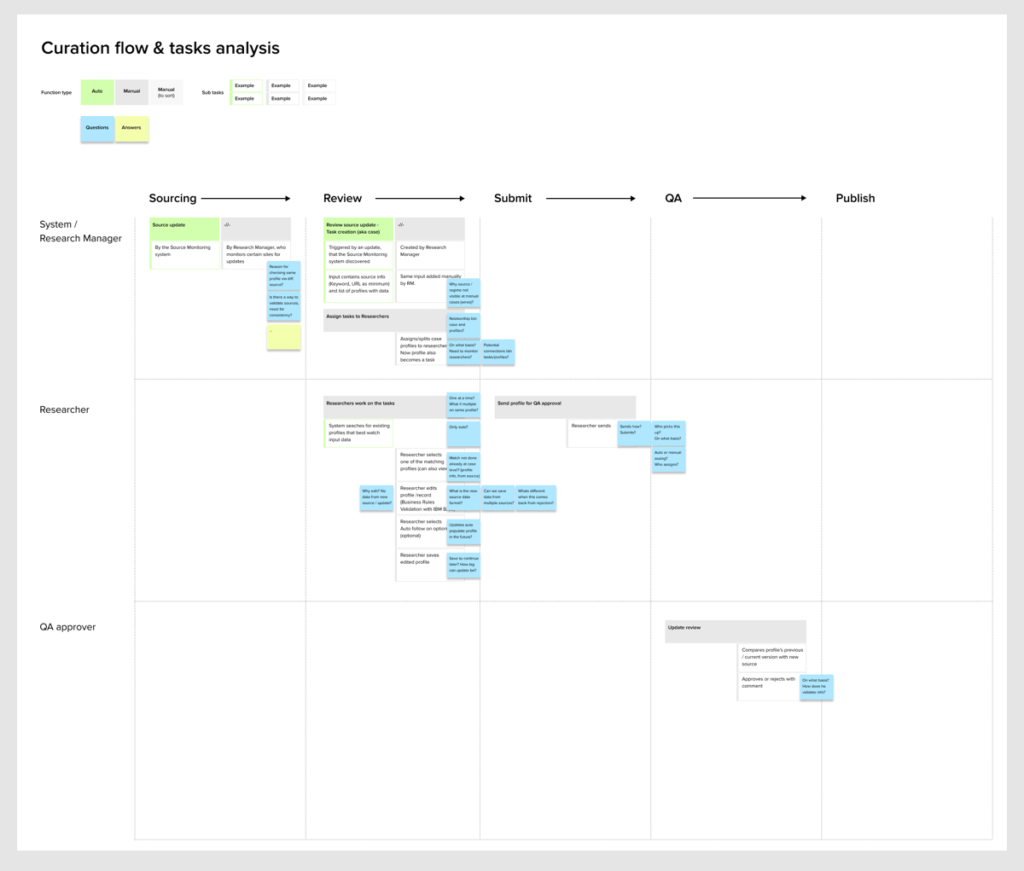

Discovery flows

An initial high-level flow to understand and compare existing manual tasks with proposed automated tasks across the curation journey. It maps these tasks to the actors involved and identifies which of them can be done either manually or automatically.

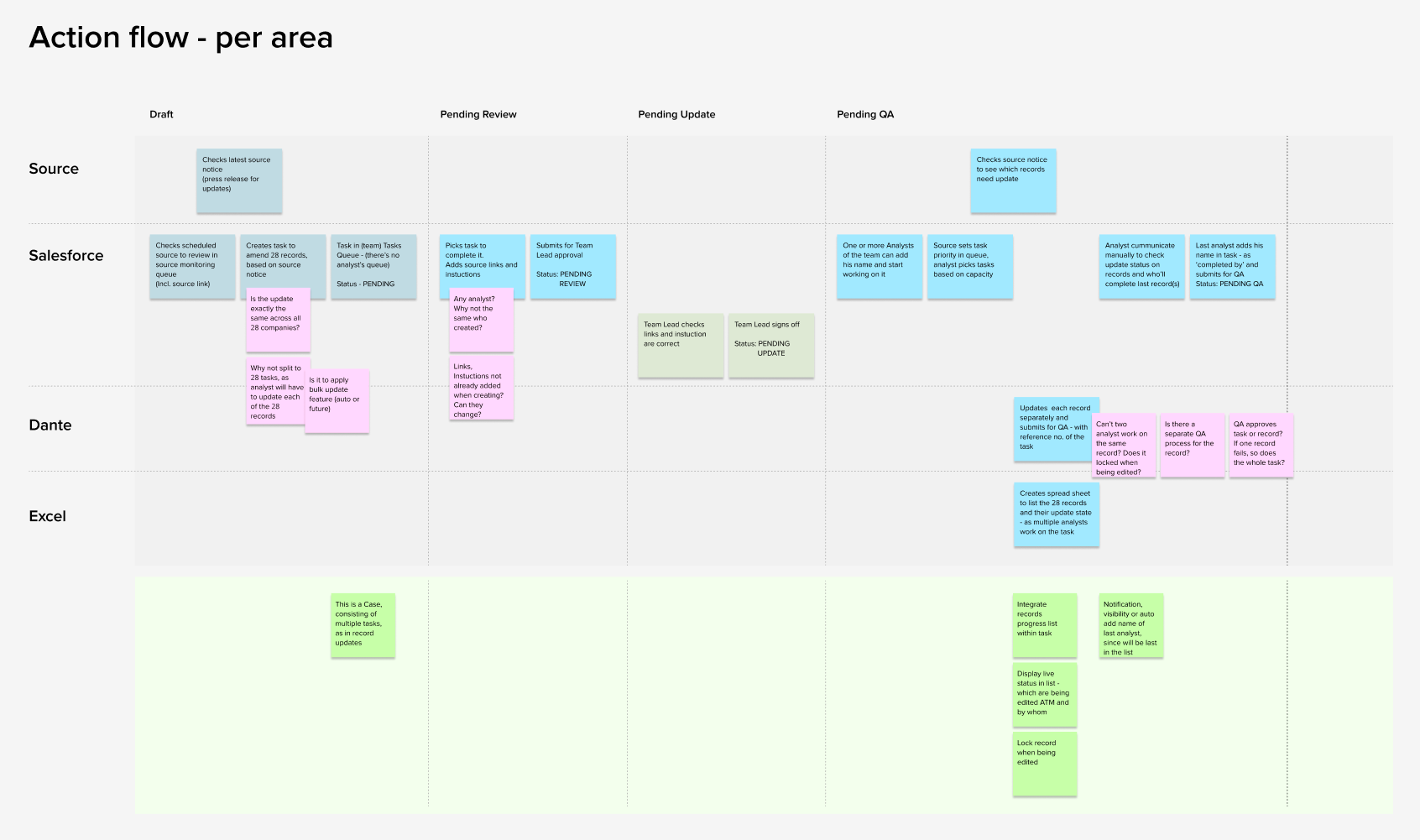

More specific flow to capture all actions performed within each Record Task state, as well as the platform/tool that is used in the current structure

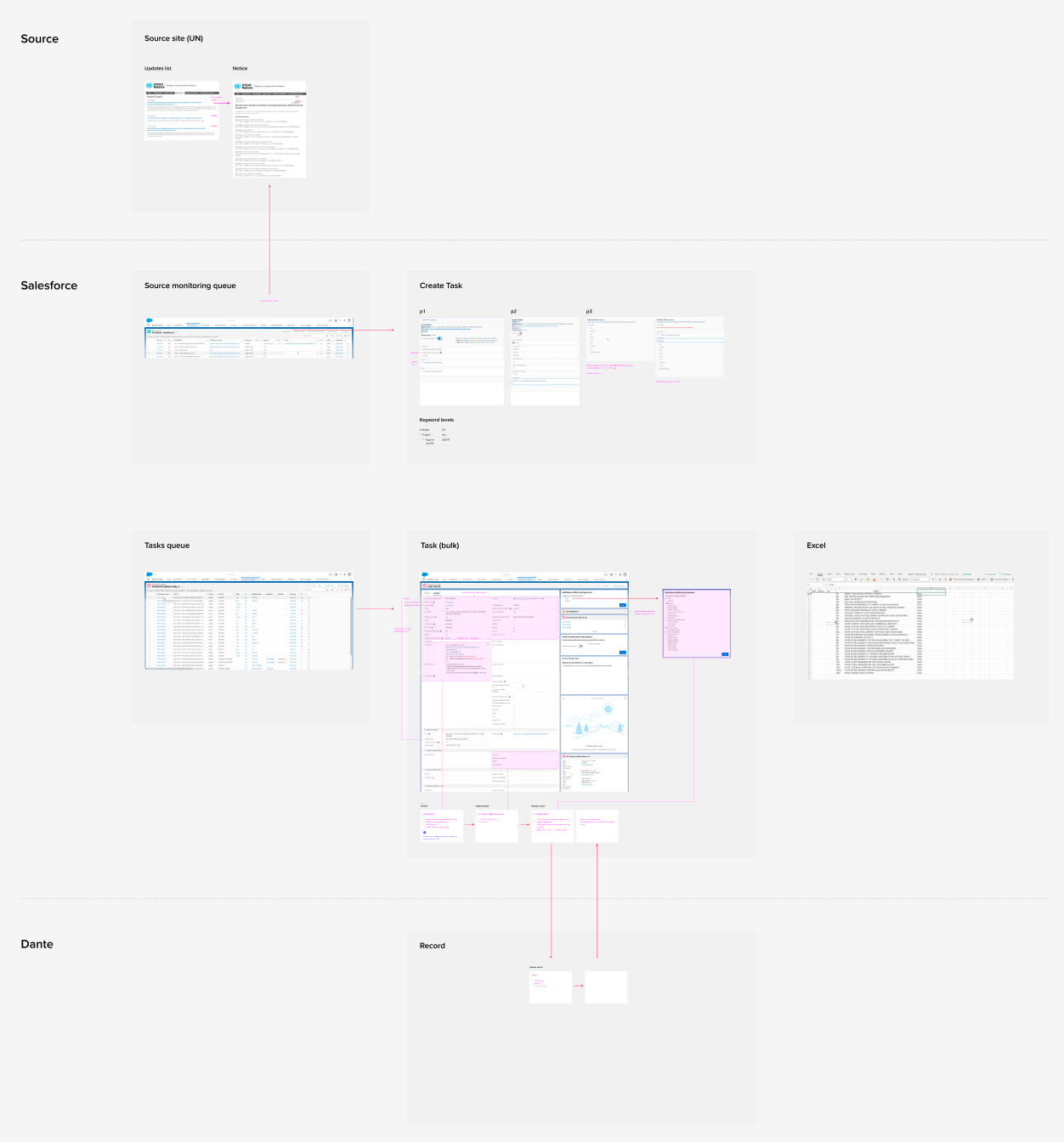

followed by page extracts / mockups to depict and further define these actions.

Ideation flows & concepts

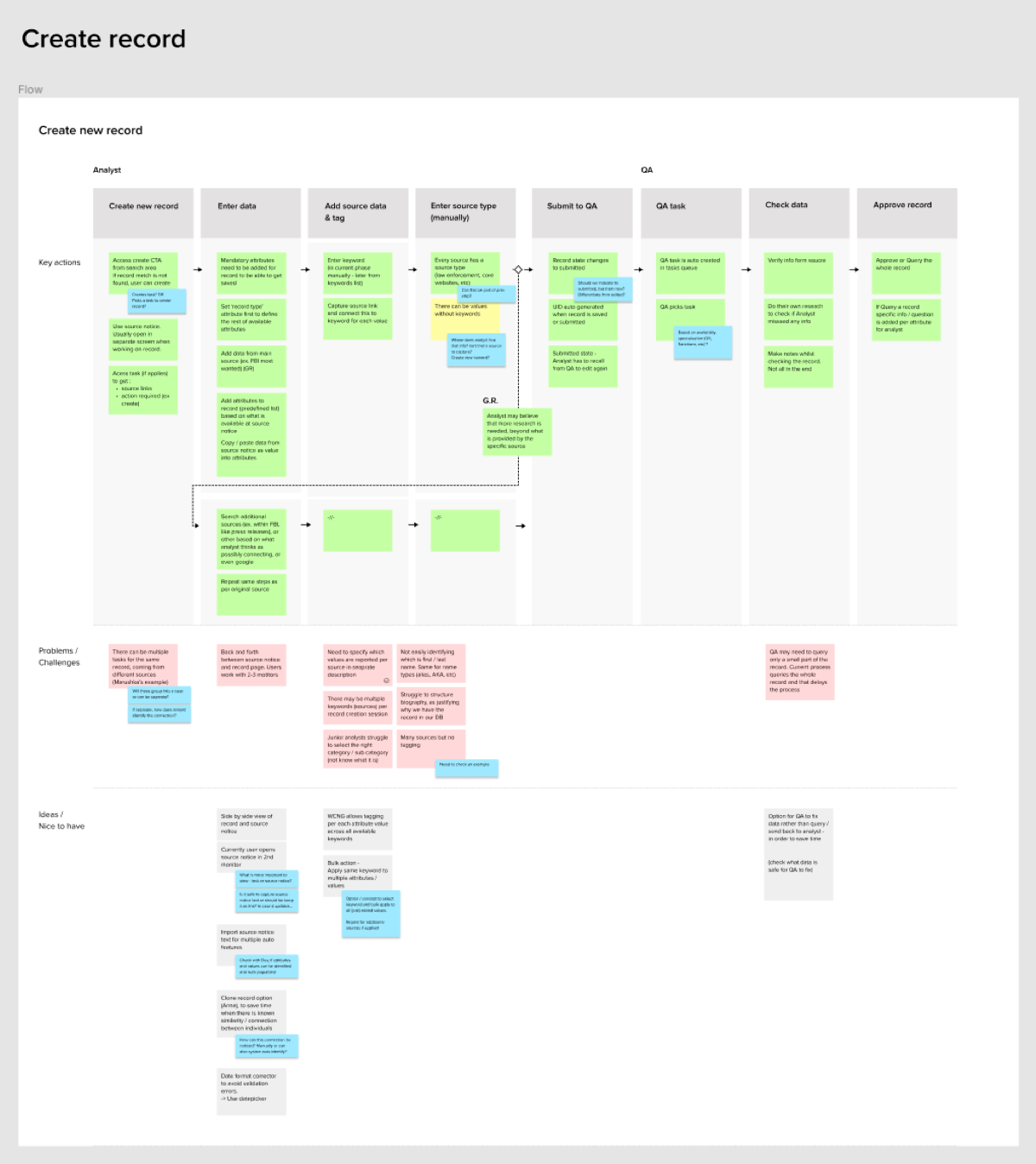

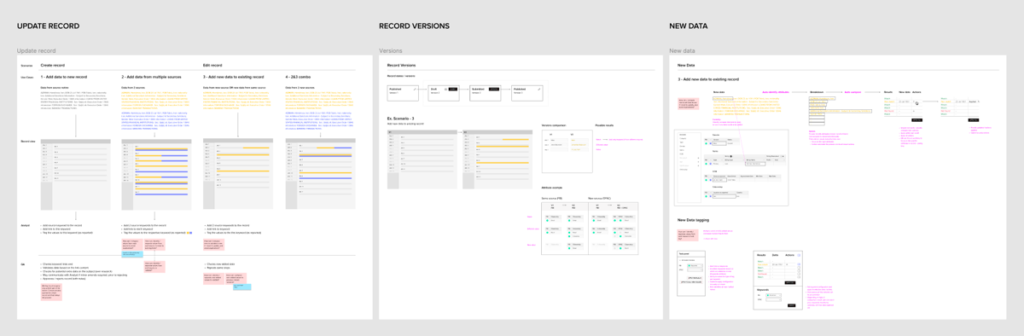

The BAs provided the main flows, which were later analysed with users to capture every step and action and to identify challenges, new ideas and nice-to-have features.

Data update types between record versions: the initial concept was to use APIs to break down new source data and display a comparison of only the updated entries, for the analyst to approve and apply to the record.

That concept later evolved into the ‘Summary of changes’ dashboard and filter feature.
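The comparison idea can be illustrated with a minimal Python sketch. It is not the project's implementation: the function names, the flat dictionary record shape and the change labels (`added`, `updated`, `removed`) are all assumptions made for illustration. It shows how two versions of a record could be reduced to only the entries that differ, and how an analyst's approvals could then be applied.

```python
from typing import Any, Dict, Iterable

# Sentinel to tell "attribute absent" apart from "attribute present with value None".
_MISSING = object()


def summarise_changes(current: Dict[str, Any], incoming: Dict[str, Any]) -> Dict[str, Dict[str, Any]]:
    """Return only the attributes that differ between two record versions.

    Hypothetical helper: records are assumed to be flat attribute -> value maps.
    """
    changes: Dict[str, Dict[str, Any]] = {}
    for key in sorted(set(current) | set(incoming)):
        old = current.get(key, _MISSING)
        new = incoming.get(key, _MISSING)
        if old is not _MISSING and new is not _MISSING and old == new:
            continue  # unchanged entries are left out of the summary
        if old is _MISSING:
            changes[key] = {"type": "added", "old": None, "new": new}
        elif new is _MISSING:
            changes[key] = {"type": "removed", "old": old, "new": None}
        else:
            changes[key] = {"type": "updated", "old": old, "new": new}
    return changes


def apply_approved(current: Dict[str, Any],
                   changes: Dict[str, Dict[str, Any]],
                   approved: Iterable[str]) -> Dict[str, Any]:
    """Return a new record with only the analyst-approved changes applied."""
    updated = dict(current)
    for key in approved:
        change = changes[key]
        if change["type"] == "removed":
            updated.pop(key, None)
        else:
            updated[key] = change["new"]
    return updated
```

In this sketch the analyst would be shown the result of `summarise_changes` and would pass the keys they accept to `apply_approved`; any change that is not approved leaves the record untouched.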

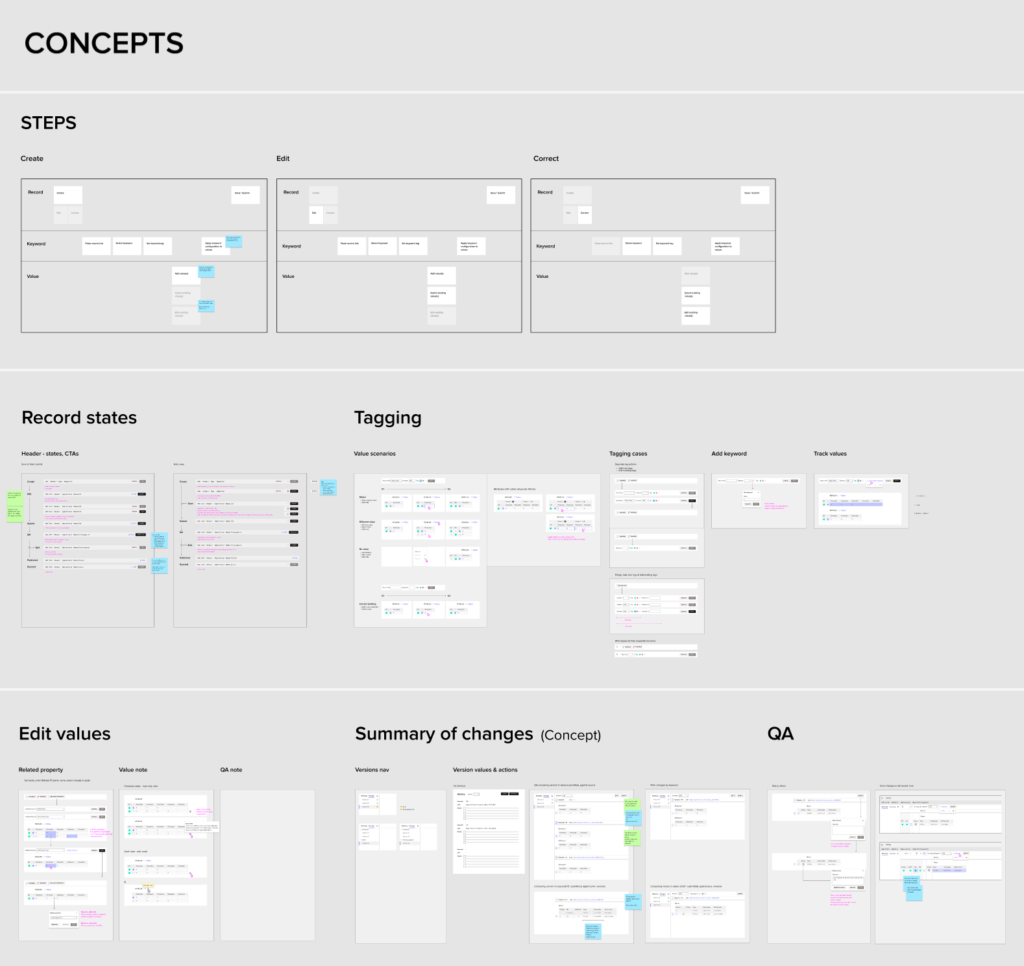

Early designs on key features

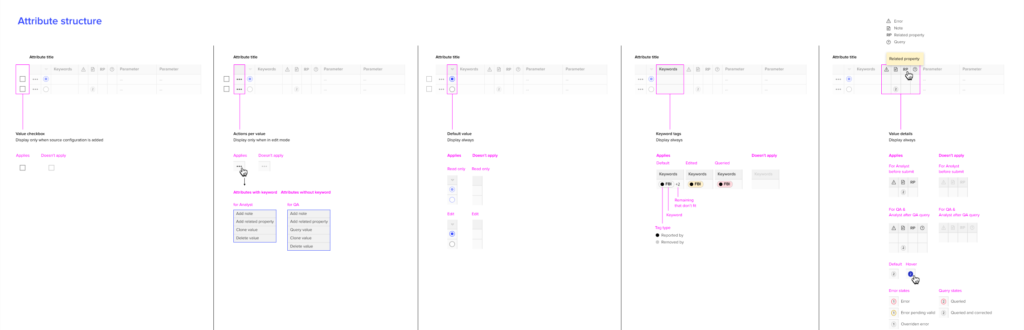

Component templates

Tagging

States, actors, actions

Validation Tool

All records need to be migrated from the old platform to the new one and vice versa, as certain clients may not upgrade to the new platform. The two platforms structure their content differently, so errors can occur during migration. The Validation Tool identifies these errors and merges all data so that it is displayed in the actual WCNG record structure. This way, analysts can assign erroneous values (issues) that belong to the same attribute and validate them in bulk.
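The bulk validation idea can be sketched in Python as follows. This is an illustration under stated assumptions, not the Validation Tool itself: the `Issue` class, its fields and both helper functions are hypothetical, and the real WCNG record structure is not shown in this case study. The sketch groups migration issues by attribute so that one correction can be applied to every matching value at once.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass
class Issue:
    """A value that failed migration checks (hypothetical shape)."""
    record_id: str
    attribute: str
    value: str
    resolved_value: Optional[str] = None


def group_by_attribute(issues: List[Issue]) -> Dict[str, List[Issue]]:
    """Collect issues under the attribute they belong to."""
    grouped: Dict[str, List[Issue]] = defaultdict(list)
    for issue in issues:
        grouped[issue.attribute].append(issue)
    return dict(grouped)


def validate_in_bulk(issues: List[Issue], corrections: Dict[str, str]) -> int:
    """Apply a bad-value -> corrected-value mapping to every matching issue.

    Returns the number of issues resolved; issues with no matching
    correction are left unresolved for manual review.
    """
    resolved = 0
    for issue in issues:
        if issue.value in corrections:
            issue.resolved_value = corrections[issue.value]
            resolved += 1
    return resolved
```

Grouping first means an analyst who decides how one erroneous value should be corrected makes that decision once per attribute, rather than once per record.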
